1. Monitoring system statistics

One of a system administrator's main tasks is to use statistics to check whether the system is operating properly. In this post, let's look at shell scripts that collect and report the basic statistics every administrator should track.

Disk space monitoring

To monitor disk space, use the df command. Below is a sample of its output.

|

$ df
Filesystem   1K-blocks     Used  Available  Use%  Mounted on
/dev/hda1      3197228  2453980     580836   81%  /
varrun          127544      100     127444    1%  /var/run
varlock         127544        4     127540    1%  /var/lock
udev            127544       44     127500    1%  /dev
devshm          127544        0     127544    0%  /dev/shm
/dev/hda3       801636   139588     621328   19%  /home

|

Using the df output above, let's build a command pipeline that extracts the usage of the root (/) filesystem. It uses sed and gawk, which we learned earlier.

|

$ df | sed -n '/\/$/p' | gawk '{print $5}' | sed 's/%//'
81
$

|

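The pipeline works in three steps:

- sed -n '/\/$/p' prints only the line whose mount point is /.
- gawk prints that line's fifth field (Use%).
- The last sed removes the percent sign.

The result shows that the root (/) filesystem is at 81% usage.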
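The sed pattern above depends on the root filesystem's line ending in /. A more direct alternative is to match the mount-point column itself. The sketch below assumes `df -P` (POSIX output format), which is specified to print one line per filesystem. In that format the mount point is the sixth field, as long as mount points contain no spaces. The function name usage_for_mount is hypothetical.

```shell
#!/bin/bash
# Print the usage percentage (without the % sign) of the filesystem
# mounted at the given mount point. Reads `df -P` output on stdin.
# Assumption: POSIX format, one line per filesystem, mount point in field 6.
usage_for_mount() {
    awk -v mp="$1" '
        NR > 1 && $6 == mp {
            sub(/%/, "", $5)   # "81%" -> "81"
            print $5
        }
    '
}

# Usage on a live system:
#   df -P / | usage_for_mount /
```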

Create a script

The pipeline above extracts the root filesystem's usage. Now let's create a simple script around it. The script stores that value in a variable and e-mails the administrator when usage reaches a predetermined threshold (90% here).

|

$ cat diskmon
#!/bin/bash
# monitor available disk space
SPACE=`df | sed -n '/\/$/p' | gawk '{print $5}' | sed 's/%//'`
if [ $SPACE -ge 90 ]
then
   echo "Disk space on root at $SPACE% used" | mail -s "Disk warning" rich
fi
$

|
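One weakness of diskmon shows up when the pipeline returns nothing, for example because the df output format changed. In that case `[ $SPACE -ge 90 ]` fails with a shell error instead of a clear message. The sketch below moves the threshold check into a function that validates the value first. The name disk_warning and the default limit of 90 are illustrative assumptions. Sending the mail is left to the caller.

```shell
#!/bin/bash
# Print a warning message and return 0 when disk usage meets or exceeds
# the limit; return 1 when usage is below it, 2 when the value is invalid.
# $1 = usage percentage (e.g. 81), $2 = limit (defaults to 90, as in diskmon)
disk_warning() {
    local used="$1" limit="${2:-90}"
    # Reject empty or non-numeric input instead of letting [ ... -ge ... ] fail
    case "$used" in
        ''|*[!0-9]*) echo "invalid usage value: '$used'" >&2; return 2 ;;
    esac
    if [ "$used" -ge "$limit" ]; then
        echo "Disk space on root at ${used}% used"
        return 0
    fi
    return 1
}

# Hypothetical wiring inside diskmon:
#   if MSG=$(disk_warning "$SPACE" 90); then
#       echo "$MSG" | mail -s "Disk warning" rich
#   fi
```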

To run the check automatically, register the script in crontab (see Chapter 1 for how to use crontab). Below are two example crontab entries.

|

30 0 * * * /home/rich/diskmon ==> Check once a day, at 00:30

30 0,8,12,16 * * * /home/rich/diskmon ==> Check 4 times a day (00:30, 08:30, 12:30, and 16:30)

|

Catching disk hogs

As a server administrator, it is also important to know who is using disk space and how much. Unfortunately, no single command reports this, but you can build the report with a script. Start with the du command, which shows the disk space used by files and directories. Run against each home directory, it gives the usage per user. The -s option prints only a summary total for each argument instead of listing every subdirectory. Let's look at an example below.

|

# du -s /home/*
40      /home/barbara
9868    /home/jessica
40      /home/katie
40      /home/lost+found
107340  /home/rich
5124    /home/test
#

|

Depending on the Linux system, the output may also include the lost+found directory. You can filter it out with grep -v, which prints only the lines that do not match the pattern:

|

# du -s /home/* | grep -v lost
40      /home/barbara
9868    /home/jessica
40      /home/katie
107340  /home/rich
5124    /home/test
#

|

Next, strip the unnecessary part of each line, the /home/ path, so that only the user name remains:

|

# du -s /home/* | grep -v lost | sed 's/\/home\///'
40      barbara
9868    jessica
40      katie
107340  rich
5124    test
#

|

Now let's sort the list in descending order of usage. sort -g compares the values numerically, and -r reverses the order so the biggest users come first:

|

# du -s /home/* | grep -v lost | sed 's/\/home\///' | sort -g -r
107340  rich
9868    jessica
5124    test
40      katie
40      barbara
#

|
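The grep and sed steps can also be folded into a single awk program followed by sort. The sketch below makes three assumptions. du output has the form "size path". Directory names contain no spaces. The helper name per_user_usage is hypothetical. It uses sort -n -r, which is enough here because du prints plain integers (sort -g additionally understands floating-point notation).

```shell
#!/bin/bash
# Read `du -s /home/*` output on stdin and print "size user" lines,
# largest first, skipping lost+found.
# Assumption: directory names contain no spaces.
per_user_usage() {
    awk '
        index($2, "lost+found") { next }    # drop the lost+found directory
        {
            n = split($2, parts, "/")       # "/home/rich" -> last part "rich"
            print $1, parts[n]
        }
    ' | sort -n -r
}

# Usage on a live system:
#   du -s /home/* | per_user_usage
```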

What is still missing is the total disk space used by all users. You can get it with the following command:

|

# du -s /home
122420  /home
#

|

Now let's combine the commands above into a script whose report contains the following three parts.

■ A header line showing the column names

■ The report body

■ The total usage

Let's write the script as below.

|

# cat diskhogs
#!/bin/bash
# calculate disk usage and report per user
TEMP=`mktemp -t tmp.XXXXXX`
du -s /home/* | grep -v lost | sed 's/\/home\///' | sort -g -r > $TEMP
TOTAL=`du -s /home | gawk '{print $1}'`
cat $TEMP | gawk -v n="$TOTAL" '
BEGIN {
   print "Total Disk Usage by User";
   print "User\tSpace\tPercent"
}
{
   # %% prints a literal percent sign
   printf "%s\t%d\t%6.2f%%\n", $2, $1, ($1/n)*100
}
END {
   print "--------------------------";
   printf "Total\t%d\n", n
}'
rm -f $TEMP
#

|
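The temporary file in diskhogs is optional, since the sorted list can be piped straight into gawk. The sketch below turns the report step into a function. It reads "size user" lines on stdin and takes the total as an argument. The name format_report is hypothetical. The sketch uses plain awk; gawk runs the same program. The n > 0 guard avoids a division by zero.

```shell
#!/bin/bash
# Format "size user" lines (stdin) as a per-user report.
# $1 = total usage used for the percentage column.
format_report() {
    awk -v n="$1" '
        BEGIN {
            print "Total Disk Usage by User"
            print "User\tSpace\tPercent"
        }
        {
            pct = (n > 0) ? ($1 / n) * 100 : 0
            printf "%s\t%d\t%6.2f%%\n", $2, $1, pct    # %% prints a literal %
        }
        END {
            print "--------------------------"
            printf "Total\t%d\n", n
        }
    '
}

# Usage on a live system (no temporary file needed):
#   du -s /home/* | grep -v lost | sed 's/\/home\///' | sort -g -r |
#       format_report "$(du -s /home | awk '{print $1}')"
```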

Running the script produces the following report:

|

# ./diskhogs
Total Disk Usage by User
User    Space   Percent
rich    107340   87.68%
jessica 9868      8.06%
test    5124      4.19%
katie   40        0.03%
barbara 40        0.03%
--------------------------
Total   122420
#

|

Now that the script produces the report in the format you want, you can register it in crontab to run it automatically.

CPU and memory usage monitoring

Now let's create a script that monitors CPU and memory usage. First, let's look at the uptime command, which displays the following four pieces of information:

■ The current time

■ How long the system has been running (days, hours, and minutes)

■ The number of users currently logged in

■ The system load averages over the last 1, 5, and 15 minutes

|

$ uptime
09:57:15 up 3:22, 3 users, load average: 0.00, 0.08, 0.28
$

|
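Picking values out of uptime by field number is fragile. Once the system has been up for more than a day, uptime prints something like "up 2 days, 3:22," and every later field shifts. A more robust option is to split on the "load average:" label instead. The sketch below does this and assumes the Linux procps output format; load_average is a hypothetical helper name. On Linux, the first three fields of /proc/loadavg hold the same 1-, 5-, and 15-minute values.

```shell
#!/bin/bash
# Print one load average from `uptime` output read on stdin.
# $1 selects which: 1 = 1-minute, 2 = 5-minute (default), 3 = 15-minute.
# Assumption: Linux-style output ending in "load average: a, b, c".
load_average() {
    sed -n 's/.*load average: *//p' | awk -F', *' -v i="${1:-2}" '{ print $i }'
}

# Usage on a live system:
#   uptime | load_average 2
```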

Another command that reports CPU and memory usage is vmstat. When run without parameters, it prints a single line of averages since the last reboot. To see current values, pass an interval (in seconds) and a count. For example, vmstat 1 2 produces two reports one second apart, and the second report reflects the current state of the system.

Let's look at the output below.

|

$ vmstat
procs ----memory--------- ---swap-- --io-- --system-- -----cpu------
 r  b   swpd   free   buff  cache   si   so    bi    bo   in   cs us sy id wa st
 0  0 178660  13524   4316  72076    8   10    80    22  127  124  3  1 92  4  0
$
$ vmstat 1 2
procs ----memory--------- ---swap-- --io-- --system-- -----cpu------
 r  b   swpd   free   buff  cache   si   so    bi    bo   in   cs us sy id wa st
 0  0 178660  13524   4316  72076    8   10    80    22  127  124  3  1 92  4  0
 0  0 178660  12845   4245  71890    8   10    80    22  127  124  3  1 86 10  0
$

|

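In the output of vmstat 1 2, the first data line shows the averages since the last reboot, and the second shows the current values. The meaning of each column is described in the table below. The two columns used later in this section are free (the amount of idle memory, in kilobytes) and id (the percentage of CPU time spent idle).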

Now let's use this output to build the script. First, drop the two header lines; only the data lines contain digits. Then keep only the second data line, which holds the current values:

|

$ vmstat 1 2 | sed -n '/[0-9]/p' | sed -n '2p'
0 0 178660 12845 4245 71890 8 10 80 22 127 124 3 1 86 10 0
$

|
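Field numbers such as $4 and $15 depend on vmstat's column layout, which can differ between procps versions. The sketch below avoids that dependency by looking up a column by the name shown in vmstat's second header line. It prints that column's value from the last data line, which is the current sample when vmstat runs with an interval. vmstat_column is a hypothetical name.

```shell
#!/bin/bash
# Print the value of a named vmstat column (e.g. free, id) from the
# last data line of vmstat output read on stdin.
# Returns non-zero if the column name is not found.
vmstat_column() {
    awk -v col="$1" '
        # Second header line: "r b swpd free ..." -> find the column index
        $1 == "r" && $2 == "b" {
            for (i = 1; i <= NF; i++) if ($i == col) idx = i
            next
        }
        # Data lines start with a number; keep the latest value seen
        idx && $1 ~ /^[0-9]+$/ { val = $idx }
        END { if (!idx) exit 1; print val }
    '
}

# Usage on a live system:
#   vmstat 1 2 | vmstat_column free
#   vmstat 1 2 | vmstat_column id
```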

To record when each sample was taken, we will use the date command as follows:

|

$ date +"%m/%d/%Y %k:%M:%S"
02/05/2008 19:19:26
$

|

Now let's create the script.

|

$ cat capstats
#!/bin/bash
# script to capture system statistics
OUTFILE=/home/rich/capstats.csv
DATE=`date +%m/%d/%Y`
# %H zero-pads the hour, so the CSV field never starts with a space
TIME=`date +%H:%M:%S`
TIMEOUT=`uptime`
VMOUT=`vmstat 1 2`
# field numbers assume uptime prints "up H:MM," (under a day of uptime)
USERS=`echo $TIMEOUT | gawk '{print $4}'`
LOAD=`echo $TIMEOUT | gawk '{print $9}' | sed 's/,//'`
# quote $VMOUT so its line breaks survive; sed needs the separate lines
FREE=`echo "$VMOUT" | sed -n '/[0-9]/p' | sed -n '2p' | gawk '{print $4}'`
IDLE=`echo "$VMOUT" | sed -n '/[0-9]/p' | sed -n '2p' | gawk '{print $15}'`
echo "$DATE,$TIME,$USERS,$LOAD,$FREE,$IDLE" >> $OUTFILE
$

|
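Quoting matters when a variable holds multi-line command output, as VMOUT does in capstats. Without quotes, the shell splits the captured text into words and echo rejoins them with spaces. All of vmstat's output then reaches sed as one line, and sed -n '2p' has nothing to print. The short demonstration below shows the difference with a two-line sample string.

```shell
#!/bin/bash
# Demonstrate why multi-line command output must be quoted when echoed.
sample=$(printf 'line one\nline two')

# Unquoted: word splitting turns the newline into a space -> one line
echo $sample | sed -n '2p'      # prints nothing

# Quoted: the newline is preserved -> sed sees two lines
echo "$sample" | sed -n '2p'    # prints: line two
```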

Because each record is stored as comma-separated values, gawk with the -F, option can format the data more readably. For example, to show the date, time, and load average:

|

$ cat capstats.csv | gawk -F, '{printf "%s %s - %s\n", $1, $2, $4}'
02/06/2008 10:39:57 - 0.26
02/06/2008 10:41:52 - 0.14
02/06/2008 10:50:01 - 0.06
02/06/2008 11:00:01 - 0.18
02/06/2008 11:10:01 - 0.03
02/06/2008 11:20:01 - 0.07
02/06/2008 11:30:01 - 0.03
$

|
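Since capstats appends one CSV record per run, gawk can also summarize the file. The sketch below prints the average and maximum load for each day. It assumes the field order written by capstats: date, time, users, load, free memory, CPU idle. The name daily_load_summary is hypothetical. The output is piped through sort because awk's for (key in array) loop visits keys in no defined order.

```shell
#!/bin/bash
# Summarize capstats CSV records (stdin): average and peak load per day.
# Assumed field order: date,time,users,load,free,idle
daily_load_summary() {
    awk -F, '
        {
            sum[$1] += $4
            cnt[$1]++
            if (!($1 in peak) || $4 + 0 > peak[$1]) peak[$1] = $4 + 0
        }
        END {
            for (d in sum)
                printf "%s avg=%.2f max=%.2f\n", d, sum[d] / cnt[d], peak[d]
        }
    ' | sort
}

# Usage on a live system:
#   daily_load_summary < /home/rich/capstats.csv
```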

You can also format the results as an HTML report by placing the data in an HTML table. The script below builds the report and e-mails it as an attachment with mutt.

|

$ cat reportstats
#!/bin/bash
# parse capstats data into daily report
FILE=/home/rich/capstats.csv
TEMP=/home/rich/capstats.html
MAIL=`which mutt`
DATE=`date +"%A, %B %d, %Y"`
echo "<html><body><h2>Report for $DATE</h2>" > $TEMP
echo "<table border=\"1\">" >> $TEMP
echo "<tr><td>Date</td><td>Time</td><td>Users</td>" >> $TEMP
echo "<td>Load</td><td>Free Memory</td><td>%CPU Idle</td></tr>" >> $TEMP
cat $FILE | gawk -F, '{
  printf "<tr><td>%s</td><td>%s</td><td>%s</td>", $1, $2, $3;
  printf "<td>%s</td><td>%s</td><td>%s</td>\n</tr>\n", $4, $5, $6;
}' >> $TEMP
echo "</table></body></html>" >> $TEMP
# mutt: -a must follow the other options, and -- separates attachments from recipients
$MAIL -s "Stat report for $DATE" -a $TEMP -- rich < /dev/null
rm -f $TEMP
$

|

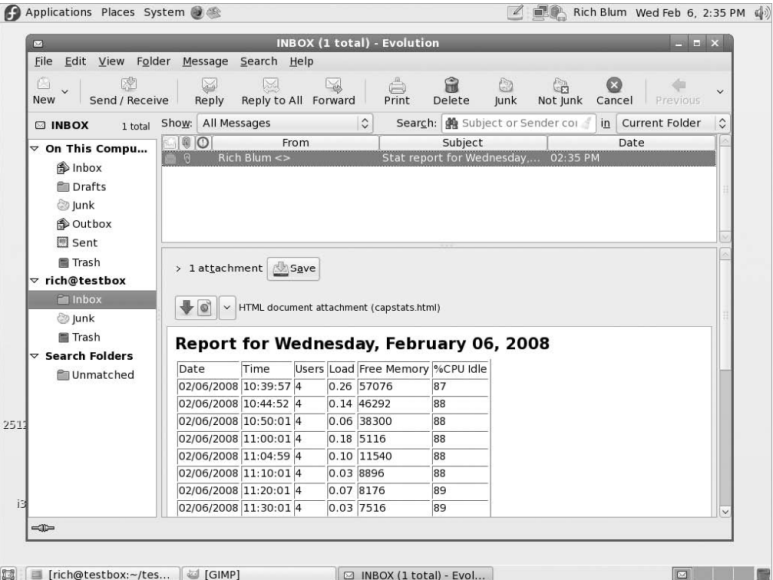

If you run the result value, you can receive the html report by e-mail as shown below.
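The gawk program in reportstats hard-codes six columns. The alternative sketch below loops over however many fields each CSV line has, so adding a column to capstats needs only a new header cell. csv_to_html_table is a hypothetical name. The sketch uses <th> header cells where reportstats uses <td>. The values are not HTML-escaped, which is acceptable for the numeric data capstats writes.

```shell
#!/bin/bash
# Convert capstats CSV records (stdin) into an HTML table on stdout.
csv_to_html_table() {
    echo '<table border="1">'
    echo '<tr><th>Date</th><th>Time</th><th>Users</th><th>Load</th><th>Free Memory</th><th>%CPU Idle</th></tr>'
    awk -F, '{
        row = "<tr>"
        for (i = 1; i <= NF; i++)
            row = row "<td>" $i "</td>"   # one cell per CSV field
        print row "</tr>"
    }'
    echo '</table>'
}

# Usage on a live system:
#   csv_to_html_table < /home/rich/capstats.csv > /home/rich/capstats.html
```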

2. Perform a backup

For a system administrator, one of the most important tasks is preserving data. So this time, let's look at scripts for performing backups.

Archiving data files

Taking a snapshot of your working directory and storing it in a safe place is a useful way to protect your data. Let's write a script that does this automatically.

The necessary steps are as follows.

1) Use the tar command to combine all the files in the working directory into a single archive file. The 2> /dev/null redirection discards tar's warning messages.

$ tar -cf archive.tar /home/rich/test 2> /dev/null

2) Compress the archive file to reduce its size.

$ gzip archive.tar

3) Build a unique archive file name from the current date.

$ DATE=`date +%y%m%d`
$ FILE=tmp$DATE
$ echo $FILE
tmp080206
$
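The three steps can also be combined. The sketch below uses two tar options. The -z option (supported by GNU and BSD tar) compresses while archiving. The -C option archives the directory by its relative name, which avoids tar's warning about removing the leading / from member names and the 2> /dev/null it requires. make_archive and its arguments are hypothetical names. The date-based file name follows step 3.

```shell
#!/bin/bash
# Archive and compress a directory in one step; print the archive path.
# $1 = directory to back up, $2 = directory to store the archive in
make_archive() {
    local source="$1" destdir="$2"
    local file="$destdir/archive$(date +%y%m%d).tar.gz"
    mkdir -p "$destdir" || return 1
    # -C changes to the parent directory first, so the archive stores
    # relative paths (e.g. test/...) rather than /home/rich/test/...
    tar -czf "$file" -C "$(dirname "$source")" "$(basename "$source")" || return 1
    echo "$file"
}

# Usage on a live system:
#   make_archive /home/rich/test /home/rich/archive
```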

Creating a Daily Archive Script

Let's take a look at the script that creates the archive file first.

|

$ cat archdaily
#!/bin/bash
# archive a working directory
DATE=`date +%y%m%d`
FILE=archive$DATE
SOURCE=/home/rich/test
DESTINATION=/home/rich/archive/$FILE
tar -cf $DESTINATION $SOURCE 2> /dev/null
gzip $DESTINATION
$

|

Running the script creates a compressed archive file in /home/rich/archive:

|

$ ./archdaily
$ ls -al /home/rich/archive
total 24
drwxrwxr-x  2 rich rich 4096 2008-02-06 12:50 .
drwx------ 37 rich rich 4096 2008-02-06 12:50 ..
-rw-rw-r--  1 rich rich 3046 2008-02-06 12:50 archive080206.gz
$

|

Creating Hourly Archive Scripts

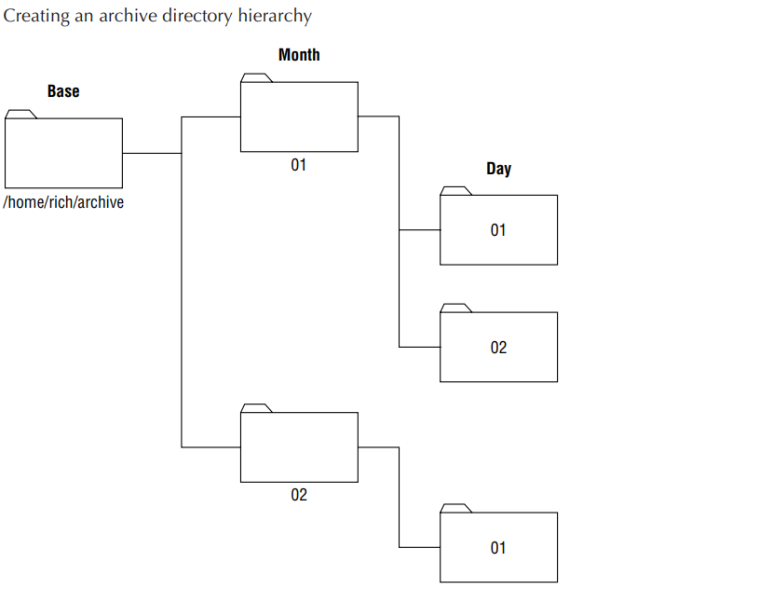

When creating archive files by time, the number of files becomes too large, making it difficult to manage. For this, the place to store the archive file should be managed by creating a structure by month/day/hour.

Below is the directory structure to be created.

The following script creates the month/day directory for the current date and saves the archive file there. mkdir -p creates any missing parent directories and does nothing if they already exist.

|

$ cat archhourly
#!/bin/bash
# archive a working directory hourly
DAY=`date +%d`
MONTH=`date +%m`
# %H zero-pads the hour (09, not " 9"), so the file name never contains a space
TIME=`date +%H%M`
SOURCE=/home/rich/test
BASEDEST=/home/rich/archive
mkdir -p $BASEDEST/$MONTH/$DAY
DESTINATION=$BASEDEST/$MONTH/$DAY/archive$TIME
tar -cf $DESTINATION $SOURCE 2> /dev/null
gzip $DESTINATION
$

|

Running the script twice, one minute apart, creates a second archive file in the same day's directory:

|

$ ./archhourly
$ ls -al /home/rich/archive/02/06
total 32
drwxrwxr-x 2 rich rich 4096 2008-02-06 13:20 .
drwxrwxr-x 3 rich rich 4096 2008-02-06 13:19 ..
-rw-rw-r-- 1 rich rich 3145 2008-02-06 13:19 archive1319.gz
$ ./archhourly
$ ls -al /home/rich/archive/02/06
total 32
drwxrwxr-x 2 rich rich 4096 2008-02-06 13:20 .
drwxrwxr-x 3 rich rich 4096 2008-02-06 13:19 ..
-rw-rw-r-- 1 rich rich 3145 2008-02-06 13:19 archive1319.gz
-rw-rw-r-- 1 rich rich 3142 2008-02-06 13:20 archive1320.gz
$

|
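The directory layout can also be sketched as a separate function that creates the month/day path and prints the full archive name. It uses %H rather than %k, because %k pads single-digit hours with a space, which would put a space into the file name. hourly_dest is a hypothetical name. The sketch only builds the path; the tar and gzip steps stay as in archhourly.

```shell
#!/bin/bash
# Create BASE/MM/DD for the current date and print BASE/MM/DD/archiveHHMM.
# $1 = base archive directory
hourly_dest() {
    local base="$1"
    local dir="$base/$(date +%m)/$(date +%d)"
    mkdir -p "$dir" || return 1
    # %H is zero-padded (09), unlike %k (" 9"), so no spaces in the name
    echo "$dir/archive$(date +%H%M)"
}

# Usage on a live system:
#   DESTINATION=$(hourly_dest /home/rich/archive)
#   tar -cf "$DESTINATION" /home/rich/test 2> /dev/null && gzip "$DESTINATION"
```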

Now, let's create a script that sends the archive file by e-mail.

|

$ cat mailarch
#!/bin/bash
# archive a working directory and e-mail it out
MAIL=`which mutt`
DATE=`date +%y%m%d`
FILE=archive$DATE
SOURCE=/home/rich/test
DESTINATION=/home/rich/archive/$FILE
ZIPFILE=$DESTINATION.zip
tar -cf $DESTINATION $SOURCE 2> /dev/null
zip $ZIPFILE $DESTINATION
# mutt: -a must follow the other options, and -- separates attachments from recipients
$MAIL -s "Archive for $DATE" -a $ZIPFILE -- rich@myhost.com < /dev/null
$

|
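Mail servers often reject messages above a certain size, so a large archive may never arrive. The sketch below adds a pre-check on the attachment size. attachment_fits and the 10 MB limit in the usage comment are assumptions; use the limit your mail server actually enforces.

```shell
#!/bin/bash
# Succeed if the file exists and is no larger than the given number of bytes.
# $1 = file, $2 = maximum size in bytes
attachment_fits() {
    local file="$1" max="$2" size
    [ -f "$file" ] || return 1
    # tr strips the leading spaces some wc implementations print
    size=$(wc -c < "$file" | tr -d ' ')
    [ "$size" -le "$max" ]
}

# Hypothetical wiring in mailarch (10 MB limit assumed):
#   if attachment_fits "$ZIPFILE" $((10 * 1024 * 1024)); then
#       $MAIL -s "Archive for $DATE" -a $ZIPFILE -- rich@myhost.com < /dev/null
#   else
#       echo "Archive too large to e-mail: $ZIPFILE" >&2
#   fi
```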

That wraps up this post on shell scripting for system administration. I hope you find it useful in your work.
